Architecture

Overview

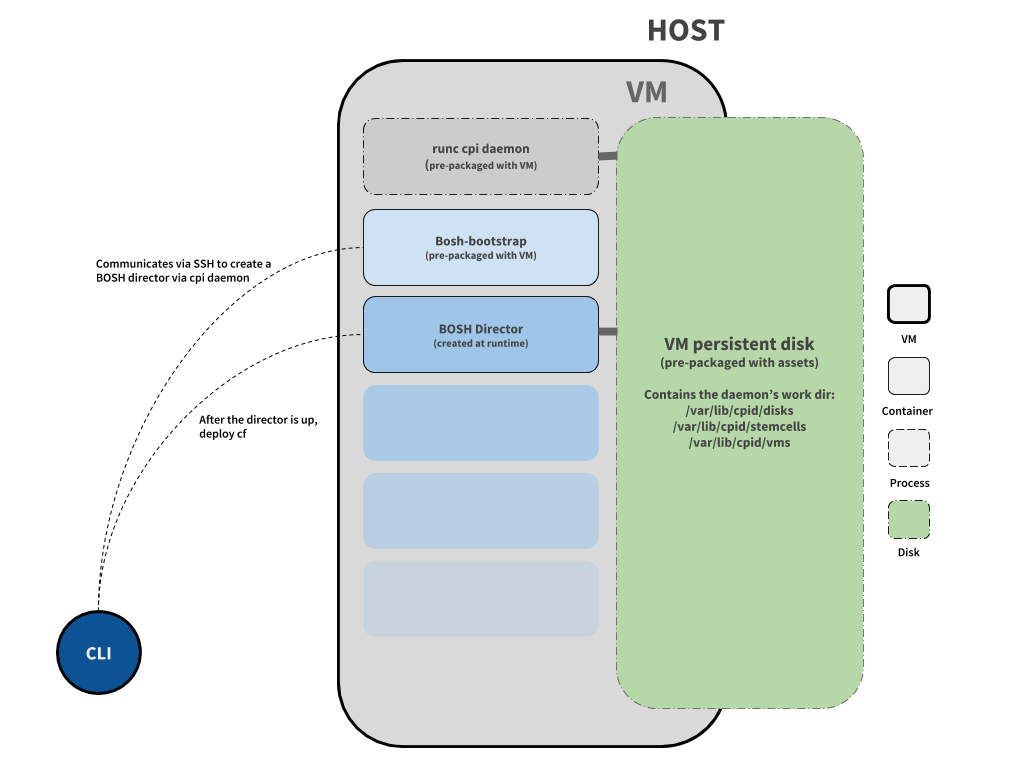

The architecture of CF Dev is much like that of BOSH Lite. In both cases, a VM is stood up with a process that satisfies the BOSH Cloud Provider Interface by deploying containers instead of VMs. However, instead of running a VM on top of VirtualBox, CF Dev runs a VM on top of native hypervisors (like Docker Machine). This also means that CF Dev's support differs more across OS platforms than BOSH Lite's does.

The plugin code itself mainly deals with orchestrating VM spin-up and teardown and invoking BOSH deploys. Once the VM is up and running, the plugin invokes a BOSH deployment that stands up a director and, subsequently, Cloud Foundry. The BOSH deployments happen on the user's host machine via a packaged BOSH CLI, but the deploy-bosh-director step happens inside a container of the boot ISO itself. This is because the bosh create-env step can only run inside a Linux environment.
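The ordering described above can be sketched as a small plan. This is only an illustration: the step names and the `Step` type are hypothetical labels, not CF Dev commands or code from the plugin.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Step:
    name: str     # hypothetical label, not a real CF Dev command
    runs_in: str  # "host" or "vm-container"


def start_plan() -> List[Step]:
    """Return the start-up steps in the order the text describes."""
    return [
        Step("start-vm", "host"),
        # bosh create-env needs Linux, so the director is deployed from a
        # container inside the VM rather than from the host.
        Step("deploy-bosh-director", "vm-container"),
        Step("deploy-cloud-foundry", "host"),
    ]


def steps_needing_linux(plan: List[Step]) -> List[str]:
    """Names of the steps that cannot run directly on the host."""
    return [step.name for step in plan if step.runs_in == "vm-container"]
```

Only the director step is pinned to the VM; everything else can use the BOSH CLI packaged for the host.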

BOSH Lite vs CF Dev

| | BOSH Lite | CF Dev |
|---|---|---|
| Container Runtime | runc | runc |
| Container Manager | Garden | runc-daemon |
| BOSH CPI | warden-cpi | runc-cpi |
| VM Runner | VirtualBox | HyperKit (darwin), HyperV (windows), KVM (linux) |
| VM Builder | - | linuxkit |
| VM Format | OVF | EFI ISO |

The CPI used in CF Dev is the runc-cpi. It is a BOSH Cloud Provider Interface that interacts with a runc-daemon (same codebase), a gRPC server that invokes the runc binary to create containers. The CPI was originally built for CF Dev, but with generic use cases in mind.
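As a rough sketch of the CPI-to-daemon split, the snippet below handles a BOSH CPI request (a JSON object with `method`, `arguments`, and `context`) and answers with the usual `result`/`error`/`log` envelope. It is not the runc-cpi implementation: `FakeDaemon` is a hypothetical in-memory stand-in for the runc-daemon, which in reality is reached over gRPC, and only two CPI methods are covered.

```python
import json


class FakeDaemon:
    """Hypothetical stand-in for the runc-daemon (the real one is a gRPC server)."""

    def __init__(self):
        self.containers = {}

    def create_container(self, agent_id, stemcell_id):
        cid = f"container-{len(self.containers) + 1}"
        self.containers[cid] = {"agent_id": agent_id, "stemcell_id": stemcell_id}
        return cid

    def delete_container(self, cid):
        self.containers.pop(cid, None)


def handle_cpi_request(raw_request, daemon):
    """Translate one CPI JSON request into a daemon call and a JSON response."""
    request = json.loads(raw_request)
    method = request["method"]
    args = request.get("arguments", [])
    result, error = None, None

    if method == "create_vm":
        # The first two create_vm arguments are the agent ID and stemcell CID.
        result = daemon.create_container(args[0], args[1])
    elif method == "delete_vm":
        daemon.delete_container(args[0])
    else:
        error = {
            "type": "Bosh::Clouds::NotImplemented",
            "message": f"{method} is not implemented in this sketch",
            "ok_to_retry": False,
        }

    return json.dumps({"result": result, "error": error, "log": ""})
```

From the director's point of view a container ID is just a VM CID, which is what lets a container-backed CPI stand in for an IaaS.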

Why runc-cpi?

runc-cpi/runcd is preferred over warden-cpi/garden-runc because the dependencies that Garden requires before starting are non-trivial, while linuxkit builds an extremely small, bare VM. The only way to run Garden within a linuxkit-built VM is to run it inside a container itself, which added performance and mental overhead. The runc-daemon only depends on tar and runc being on the $PATH and can therefore run on the bare filesystem of the VM.
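To make the "only tar and runc on the $PATH" requirement concrete, here is a minimal pre-flight check. The function name and the idea of running such a check are assumptions for illustration; the source does not say the daemon performs one.

```python
import shutil

# The two binaries the text names as the daemon's only dependencies.
REQUIRED_BINARIES = ("tar", "runc")


def missing_binaries(required=REQUIRED_BINARIES, path=None):
    """Return the required binaries that cannot be found on the search path.

    `path` defaults to the process's PATH; pass a string to check another one.
    """
    return [name for name in required if shutil.which(name, path=path) is None]
```

An empty list means the bare VM filesystem already has everything the daemon needs.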

Why linuxkit?

To be like Docker.

Does CF Dev have an OpsManager?

CF Dev does not have an OpsManager; it uses unadorned BOSH under the hood. However, a variant of OpsManager is used in the PCF CI build process. It is modified to use the runc-daemon and to be able to perform a dry-run deploy. The effect is that we can use this OpsManager to ingest service tiles and generate BOSH manifests that CF Dev can use during its lifecycle.

Tip

Linuxkit (the boot EFI ISO builder) is special in that it builds from a YAML definition of container images.

Performance

Reducing memory usage and footprint is the #2 priority of CF Dev and the biggest issue hindering adoption of the product by our users. The #1 priority is keeping the product maintainable. Balancing the two priorities has always been the engineering and product challenge that our offering faces. A couple of primary architecture decisions contribute to our current performance.

- Cached State: CF Dev speeds up the start-up process by trying to cache as much as possible. Because the BOSH lifecycle runs under the hood of CF Dev provisioning, steps such as upload-stemcell, upload-release, compile-release, create-vm, etc. are performed as part of the lifecycle. CF Dev circumvents many of these steps by taking advantage of the stateful nature of a packaged VM. One critical asset that ships with CF Dev is the disk to be mounted into the VM (disk.qcow, disk.vhdx). This disk (prepared in our pipelines) contains the working directory of the runc-cpi (/var/lib/cpid) and thus can contain the "persistent disk" of the BOSH Director, stored in /var/lib/cpid/disk/<guid>. This directory will therefore contain any uploaded stemcells and releases that the director should know about.

  Thus, when CF Dev is started at runtime with the existing VM disk, the BOSH director container is stood up with the existing /var/lib/cpid/disk/<guid> location mounted in. When the BOSH director is up and running, it will report that its stemcells and releases are already uploaded, and it can immediately skip to the create-vm part of the lifecycle.

- Parallel Deployments: When CF Dev performs its BOSH deployment steps, it attempts to do so in parallel. This means removing canaries and increasing max-in-flight. In some cases this is easy, as with cf-deployment, which provides a fast-deploy operations file. In most cases, though, it means writing our own operations files for the same behavior.
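Both decisions can be sketched in a few lines. This is a simplified illustration under stated assumptions: `remaining_steps` only models which lifecycle steps the cached disk lets CF Dev skip, and `apply_replace_ops` is a toy applier that handles only `replace` operations on nested mapping keys, not the full BOSH ops-file path syntax. The `max_in_flight` value of 10 is an arbitrary example, and `update.canaries` / `update.max_in_flight` are the manifest keys assumed to correspond to "canaries" and "max-in-flight" in the text.

```python
import copy

# Lifecycle steps named in the text, in order.
LIFECYCLE = ["upload-stemcell", "upload-release", "compile-release", "create-vm"]


def remaining_steps(already_done):
    """Steps still to run, given what the cached director disk already holds."""
    return [step for step in LIFECYCLE if step not in already_done]


def apply_replace_ops(manifest, ops):
    """Apply 'replace' operations with paths like /update/canaries.

    Returns a patched copy; the input manifest is left untouched.
    """
    patched = copy.deepcopy(manifest)
    for op in ops:
        if op["type"] != "replace":
            raise ValueError(f"unsupported operation type: {op['type']}")
        *parents, leaf = op["path"].strip("/").split("/")
        node = patched
        for key in parents:
            node = node[key]
        node[leaf] = op["value"]
    return patched


# Hypothetical ops for a parallel deploy: no canaries, more instances at once.
FAST_DEPLOY_OPS = [
    {"type": "replace", "path": "/update/canaries", "value": 0},
    {"type": "replace", "path": "/update/max_in_flight", "value": 10},
]
```

With the stemcell and release steps already satisfied by the shipped disk, `remaining_steps` leaves only `create-vm`, which is the shortcut the cached state buys.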

Platform Differences

Although the overall lifecycle is the same (stand up VM, perform BOSH deployments), there are key differences between the implementations across the different OS platforms. The following sections try to illuminate these and provide the rationale for why they differ. For the most part, the goal was to emulate Docker Machine's architecture as much as possible: linuxkit, vpnkit.

Darwin

The VM driver used for the darwin platform is known as hyperkit. We use the linuxkit tool to wrap the hyperkit binary, but we had to fork it because (at the time) the linuxkit tool had no support for .qcow2 disks. We needed support for .qcow2 because Mac versions earlier than 10.13 could only support disks in that format. The fork can be found here: https://github.com/pcfdev-forks/linuxkit/commits/qcow2.

The networking component used for the darwin platform is known as vpnkit. Vpnkit seemed promising because Docker was using it and because it offered VM proxy support cheaply. Moreover, it would allow us to bring up the VM without having to run it as root. However, to use it and still support one more capability (the details escape me, but I think it was forwarding privileged ports), we need a counterpart service running as root, known as cfdevd. Vpnkit also had to be forked to work with our custom cfdevd service.

VM and Networking Drivers

Hyperkit/vpnkit (from Docker)

Service Runner

Self-written abstraction that wraps launchctl on Mac: https://github.com/cloudfoundry-incubator/cfdev/blob/master/daemon/launchd_darwin.go

Windows

The VM driver used for the windows platform is known as HyperV. Since HyperV only supports disks as vhd/vhdx, we have to ship a disk in that format for it. One notable difference is that linuxkit is not used to wrap our interactions with HyperV, as it is on darwin. The honest reason for this inconsistency is that using linuxkit and having to fork it was a divisive decision from the start. There was some turnover at the time Windows support began, so it was a chance for those opposed to get their way.

The networking component used for the windows platform is also vpnkit. Vpnkit offered a networking interface similar to the one on the darwin platform. A key difference, though, is that cfdevd is not needed in this case, so no vpnkit fork was necessary. We did not build our own vpnkit for Windows, but instead swiped the pre-existing version from the moby CI pipelines.

VM and Networking Drivers

HyperV/vpnkit

Service Runner

Linux

The VM driver used for the linux platform is known as KVM. KVM supports qcow2 and the linuxkit tool works well with it so they were used for this platform.

The networking component used for the linux platform is a conventional tap device. Vpnkit had no solution offered or tested for the linux platform. At the time of implementing Linux support, CF Dev had dwindled down to one engineer, so getting something that worked and matched feature parity was a bigger priority than reverse-engineering the poorly documented vpnkit tool.

VM and Networking Drivers

KVM/tap-device

Service Runner

kardianos/service: https://github.com/kardianos/service
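The three platform sections can be summarized as a lookup table. This is a reading aid rather than code from the plugin: the dictionary keys and the `platform_config` helper are hypothetical, and the Windows service runner is left as `None` because this page does not name one.

```python
# Per-platform choices as described in the sections above.
PLATFORMS = {
    "darwin": {
        "vm_driver": "hyperkit",
        "network": "vpnkit (forked, with cfdevd)",
        "disk_format": "qcow2",
        "service_runner": "launchctl wrapper",
    },
    "windows": {
        "vm_driver": "HyperV",
        "network": "vpnkit",
        "disk_format": "vhdx",
        "service_runner": None,  # not stated in this document
    },
    "linux": {
        "vm_driver": "KVM",
        "network": "tap device",
        "disk_format": "qcow2",
        "service_runner": "kardianos/service",
    },
}


def platform_config(goos):
    """Look up the choices for a platform name such as 'darwin'."""
    try:
        return PLATFORMS[goos]
    except KeyError:
        raise ValueError(f"unsupported platform: {goos}") from None
```

For example, `platform_config("linux")["network"]` returns `"tap device"`, the one platform where vpnkit is not used.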